Instance Normalisation


Easy:

Imagine you are in a drawing class with your friends, and everyone is drawing a picture of an animal. Some of your friends are really good at drawing and make super-detailed and colorful pictures, while others are just learning and draw simpler, less vibrant images.

Now, your teacher wants to hang all the drawings on a wall for everyone to see. But there’s a problem: the super-detailed drawings are very bright and stand out a lot, making the simpler drawings look too dim in comparison. To make it fair and so that all drawings look nice together, the teacher decides to adjust the colors in each drawing.

For each drawing, the teacher looks only at the colors in that one drawing and makes sure the bright colors aren’t too bright and the dim colors aren’t too dim. He does this by comparing each color in the drawing to the average color of that same drawing, not to the average of all the drawings. If a color is much brighter or dimmer than the drawing’s own average, he tones it down a bit. This way, every drawing is balanced on its own terms, and all of them can be shown off without one overshadowing the others.

In deep learning, something similar happens with a technique called “Instance Normalisation.” Computers look at lots of pictures or data to learn how to do things. But just like the drawings, the data sometimes contains very big numbers and very small numbers, which makes it hard for the computer to learn evenly. Instance Normalisation balances each picture on its own, by comparing its values to that picture’s own average, so the computer can learn the important patterns instead of being distracted by how bright or dim a particular picture happens to be. This helps the computer get better at whatever it’s trying to learn, just like bringing every drawing to a similar level of brightness and color helps everyone see how well each artist is doing.

Another easy example:

Alright, imagine you have a bunch of colorful LEGO bricks, and each color represents a different part of a picture that you want to build. Now, let’s say you’re building two different pictures: one is of a sunny beach, and the other is of a snowy mountain. The beach picture has a lot more bright colors, like yellows and blues, while the snowy mountain has a lot of whites and grays.

In deep learning, when we’re teaching computers to understand pictures (or anything, really), we want them to learn what’s important whether the picture shows a sunny beach or a snowy mountain. Just as it’s easier to see the details of a LEGO picture when the colors aren’t all over the place, computers find it easier to learn when the colors (or the information in the pictures) are on a similar scale from one picture to the next.

Instance Normalization is like making sure that no matter if we’re building a beach or a mountain, the LEGO bricks are organized in a way that makes it easy to see the important parts of the picture. It’s a trick we use to adjust the colors (or the information) in each picture so that they’re easier for the computer to understand. It’s like saying, “Computer, pay attention to the shapes of the bricks and how they fit together, not just the colors.”

So, in simple terms, Instance Normalization is a way to help computers learn from pictures (or data) by making sure that the differences in colors (or information) don’t confuse them, making it easier for them to see what’s really important. It’s a bit like putting on special glasses that let you see the details of the LEGO picture better, no matter if it’s bright or dark.

Moderate:

Instance Normalization (InstanceNorm) is a technique used in deep learning, particularly in the training of neural networks, to improve the performance and stability of the model. It is especially useful in tasks involving style transfer and generative models. Here’s a more detailed explanation:

What is Instance Normalization?

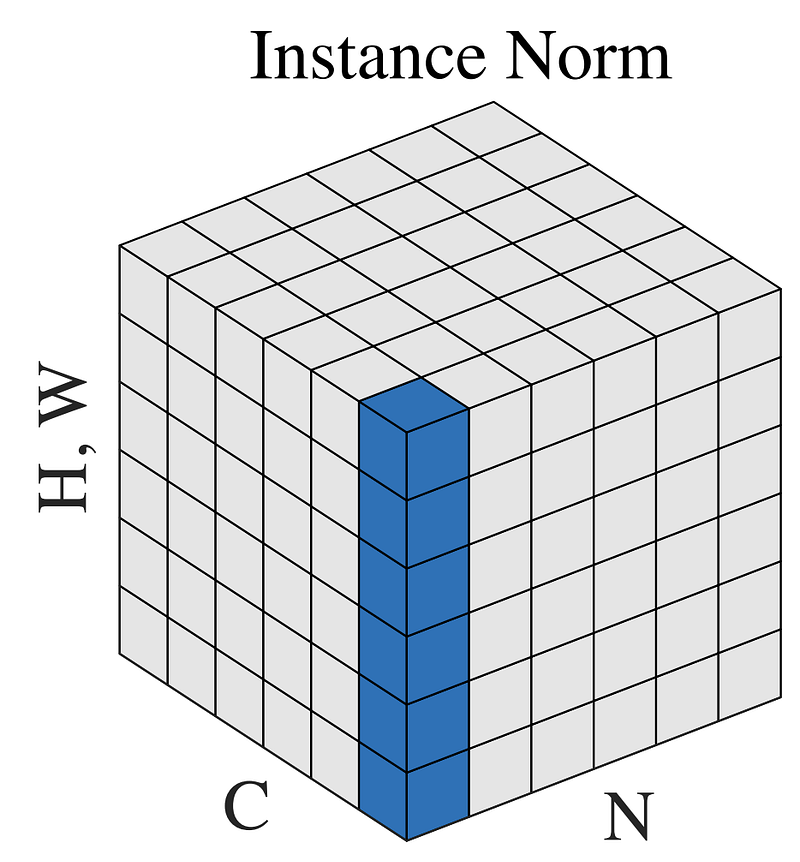

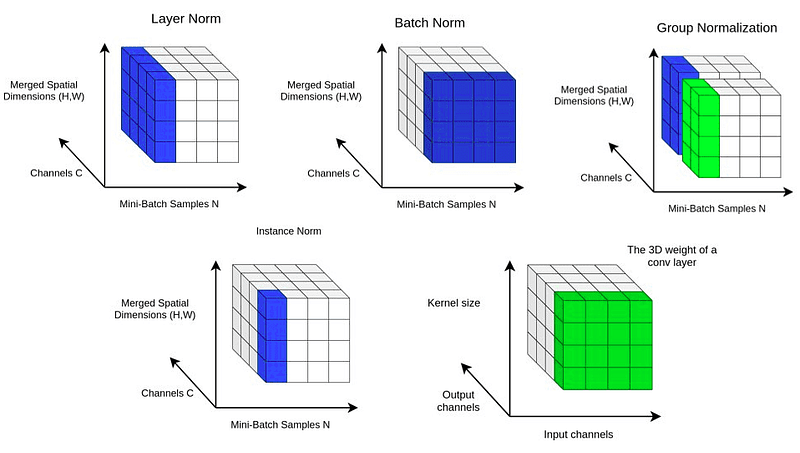

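Instance Normalization is a method that normalizes the features of each sample (or instance) individually within a mini-batch. For every sample and every channel, it computes the mean and variance over that channel’s spatial positions (height × width) and uses them to standardize that feature map. It is similar to Batch Normalization, but Batch Normalization pools these statistics across all samples in the mini-batch, whereas Instance Normalization computes them for each instance separately.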
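Written out for a batch of feature maps with shape N × C × H × W (batch size, channels, height, width), the per-instance, per-channel computation can be expressed as below. The symbols are a conventional notation chosen here, not taken from the images above: x is the input activation, μ and σ² are the per-instance, per-channel mean and variance, γ and β are learnable per-channel scale and shift parameters, and ε is a small constant for numerical stability.

```latex
\mu_{nc} = \frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} x_{nchw},
\qquad
\sigma_{nc}^{2} = \frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} \left( x_{nchw} - \mu_{nc} \right)^{2}

y_{nchw} = \gamma_{c} \, \frac{x_{nchw} - \mu_{nc}}{\sqrt{\sigma_{nc}^{2} + \epsilon}} + \beta_{c}
```

Batch Normalization (during training) uses the same formula, except that its mean and variance carry only the channel index c, because they are also averaged over the batch index n.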

Why Use Instance Normalization?

Normalization techniques are essential in deep learning because they help in:

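- Stabilizing Training: Normalizing the inputs to each layer keeps the activations on a consistent scale, which typically makes training more stable and faster.

- Reducing Internal Covariate Shift: It helps reduce the changes in the distribution of each layer’s inputs during training, which was the original motivation given for Batch Normalization. (Later research has questioned how much this effect actually explains the benefits of normalization.)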

- Improving Generalization: It can lead to better performance on unseen data.

Differences from Other Normalization Techniques

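- Batch Normalization (BatchNorm): Normalizes each channel using statistics computed across the entire mini-batch, so the output for one image depends on the other images in the batch. This can be problematic for tasks like style transfer, where the statistical properties of individual images may vary significantly.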

- Layer Normalization (LayerNorm): Normalizes all features in a layer for each instance (for images, across all channels and spatial positions together), making it well suited to recurrent neural networks and Transformers.

- Group Normalization (GroupNorm): Divides the channels of each instance into groups and normalizes within each group, striking a balance between LayerNorm (a single group containing all channels) and InstanceNorm (one group per channel).
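To make the differences concrete, here is a minimal pure-Python sketch, assuming activations are stored as a nested list `x[n][c][h][w]` (sample, channel, row, column). The helper `normalize` and its `group_key` argument are hypothetical names for illustration: the idea is that the four techniques differ only in which feature maps are pooled together when computing the mean and variance. For brevity it omits the learnable scale and shift, and `batch_norm` here uses only the current batch’s statistics (no running averages for inference).

```python
import math


def normalize(x, group_key, eps=1e-5):
    """Standardize a nested list x[n][c][h][w] group by group.

    group_key(n, c) returns the group of feature map (n, c); every feature
    map with the same key shares one mean and one variance.
    """
    num_samples, num_channels = len(x), len(x[0])

    # Pool the values of every feature map into its group.
    groups = {}
    for n in range(num_samples):
        for c in range(num_channels):
            values = groups.setdefault(group_key(n, c), [])
            for row in x[n][c]:
                values.extend(row)

    # Mean and (biased) standard deviation for each group.
    stats = {}
    for key, values in groups.items():
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        stats[key] = (mean, math.sqrt(var + eps))

    # Standardize each value with its group's statistics.
    out = []
    for n in range(num_samples):
        sample = []
        for c in range(num_channels):
            mean, std = stats[group_key(n, c)]
            sample.append([[(v - mean) / std for v in row] for row in x[n][c]])
        out.append(sample)
    return out


def batch_norm(x):
    # One group per channel, pooled over every sample in the batch.
    return normalize(x, lambda n, c: c)


def layer_norm(x):
    # One group per sample, pooled over all of its channels.
    return normalize(x, lambda n, c: n)


def instance_norm(x):
    # One group per (sample, channel) pair: each feature map on its own.
    return normalize(x, lambda n, c: (n, c))


def group_norm(x, num_groups):
    # Channels of each sample split into num_groups consecutive groups
    # (assumes the channel count is divisible by num_groups).
    group_size = len(x[0]) // num_groups
    return normalize(x, lambda n, c: (n, c // group_size))


# A batch of 2 samples, each with 2 channels of 2x2 values.
x = [
    [[[1.0, 2.0], [3.0, 4.0]], [[10.0, 20.0], [30.0, 40.0]]],
    [[[5.0, 5.0], [6.0, 8.0]], [[0.0, 1.0], [0.0, 1.0]]],
]
print(instance_norm(x)[0][0])  # roughly [[-1.34, -0.45], [0.45, 1.34]]
```

Two checks fall out of this design: with two channels, `group_norm(x, 2)` gives exactly the same result as `instance_norm(x)`, and `group_norm(x, 1)` matches `layer_norm(x)`.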

Example Use Case: Style Transfer

In style transfer, the goal is to apply the style of one image to the content of another. Instance Normalization is particularly effective here because it normalizes the statistics of each individual image, allowing the model to focus more on the stylistic features of each instance without being affected by other instances in the batch.
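The batch independence can be checked directly. In this sketch (an illustration with hypothetical helper names, assuming each feature map is flattened into a plain list so that `batch[n][c]` is one channel of one image), adding a second, very different image to the batch leaves the first image’s Instance Normalization output unchanged, while a training-mode Batch Normalization output changes.

```python
import math


def _standardize(values, eps=1e-5):
    """Return (value - mean) / sqrt(var + eps) for a list of floats."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + eps)
    return [(v - mean) / std for v in values]


def instance_norm(batch):
    # Statistics come from each (sample, channel) feature map alone.
    return [[_standardize(fmap) for fmap in sample] for sample in batch]


def batch_norm(batch):
    # Training-mode BatchNorm: statistics for channel c are pooled over
    # every sample in the batch, then each sample gets its slice back.
    num_channels = len(batch[0])
    out = [[None] * num_channels for _ in batch]
    for c in range(num_channels):
        pooled = [v for sample in batch for v in sample[c]]
        normalized = _standardize(pooled)
        size = len(batch[0][c])  # assumes all feature maps have equal size
        for n in range(len(batch)):
            out[n][c] = normalized[n * size:(n + 1) * size]
    return out


content = [[0.1, 0.5, 0.9, 0.3]]  # one image, one channel (2x2, flattened)
other = [[8.0, 9.5, 7.0, 9.9]]    # a second, much brighter image

# Instance Normalization: the first image's output is the same either way.
print(instance_norm([content])[0] == instance_norm([content, other])[0])  # True

# Batch Normalization: adding the second image changes the first one's output.
print(batch_norm([content])[0] == batch_norm([content, other])[0])  # False
```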

Summary

Instance Normalization helps to stabilize and improve the training of neural networks by normalizing each instance (or sample) independently within a mini-batch. It calculates the mean and variance for each feature map in each instance, normalizes the values, and then scales and shifts them using learnable parameters. This technique is particularly useful in tasks like style transfer where individual instance statistics are important.
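The steps in this summary can be sketched in plain Python as follows. This is a minimal illustration rather than a library implementation: it assumes `x[n][c]` is the flattened H×W feature map for channel `c` of sample `n`, and `gamma`/`beta` are ordinary floats standing in for the learnable per-channel parameters (training them is outside this sketch).

```python
import math


def instance_norm(x, gamma, beta, eps=1e-5):
    """Instance Normalization with a per-channel scale and shift.

    x[n][c] is the flattened feature map of channel c in sample n.
    gamma[c] and beta[c] stand in for the learnable parameters.
    """
    out = []
    for sample in x:
        new_sample = []
        for c, fmap in enumerate(sample):
            # 1. Mean and variance of this one feature map.
            mean = sum(fmap) / len(fmap)
            var = sum((v - mean) ** 2 for v in fmap) / len(fmap)
            # 2. Normalize to zero mean and (almost) unit variance.
            x_hat = [(v - mean) / math.sqrt(var + eps) for v in fmap]
            # 3. Scale and shift with this channel's parameters.
            new_sample.append([gamma[c] * v + beta[c] for v in x_hat])
        out.append(new_sample)
    return out


# 1 sample, 2 channels, each a 2x2 map flattened to 4 values.
x = [[[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 10.0, 10.0]]]
y = instance_norm(x, gamma=[1.0, 2.0], beta=[0.0, 5.0])
print([[round(v, 3) for v in fmap] for fmap in y[0]])
# [[-1.342, -0.447, 0.447, 1.342], [3.0, 3.0, 7.0, 7.0]]
```

In practice you would use a framework layer instead; for example, PyTorch provides `torch.nn.InstanceNorm2d`, whose `affine` argument defaults to `False`, so the learnable scale and shift are only created if you pass `affine=True`.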

Hard:

Instance Normalization is a technique used in deep learning, particularly within neural networks that process visual data such as images. It normalizes each individual instance (image) on its own, rather than across a mini-batch as Batch Normalization does. Its primary goal is to make training more efficient and stable by standardizing the inputs to the network’s layers, which is commonly described as reducing internal covariate shift.

To understand what Instance Normalization does, let’s break down a few key concepts:

- Internal Covariate Shift: This refers to the change in the distribution of the inputs to a layer in a neural network during training. As weights are updated, the distribution of outputs from one layer to the next can shift, making it difficult for the network to converge efficiently.

- Normalization: In a statistical sense, normalization (more precisely, standardization) shifts and rescales values so that they have zero mean and unit variance; it does not by itself make the values follow a normal (Gaussian) distribution. In deep learning, normalization techniques like Instance Normalization aim to stabilize learning by giving the activations of layers a more predictable scale and offset.

- Instance Normalization Process: For each input instance (usually an image), Instance Normalization computes the mean and variance of the activations in each feature map, subtracts the mean, divides by the standard deviation, and then applies a learnable scale and shift. This is done independently for each instance, so the statistics used for one image depend only on that image’s own content.

- Effects and Usage: Instance Normalization has been found particularly useful in style transfer and generative adversarial networks (GANs) because it removes per-image differences in contrast and brightness from the feature maps, letting the network focus on higher-level features. Because the statistics come from each instance alone, one image is never normalized using information from the other images in the batch, while the network still gets the usual benefits of normalization.
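The point about contrast and brightness can be illustrated with a small sketch. Assuming Instance Normalization is applied directly to a single-channel feature map (flattened into a list here), multiplying the map by a positive contrast factor and adding a brightness offset leaves the normalized output essentially unchanged; only the small stability constant `eps` keeps the two results from being identical. A negative factor would flip the sign of the output, so the invariance holds for positive factors only. The helper name `instance_norm_map` is hypothetical.

```python
import math


def instance_norm_map(fmap, eps=1e-5):
    """Normalize one flattened feature map to zero mean and unit variance."""
    mean = sum(fmap) / len(fmap)
    var = sum((v - mean) ** 2 for v in fmap) / len(fmap)
    return [(v - mean) / math.sqrt(var + eps) for v in fmap]


image = [0.2, 0.4, 0.4, 0.8, 0.6, 0.1]  # one channel, flattened

# The same map with tripled contrast and a brightness offset of 0.5.
edited = [3.0 * v + 0.5 for v in image]

a = instance_norm_map(image)
b = instance_norm_map(edited)

# A small number: eps is the only reason the difference is not exactly 0.
print(max(abs(p - q) for p, q in zip(a, b)))
```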

In summary, Instance Normalization is a technique that helps in stabilizing the learning process in deep neural networks by normalizing the activations on a per-instance basis. This makes the network more robust to variations in the input data, facilitates faster convergence, and can enhance the quality of the outputs, especially in applications involving visual style or appearance.

If you want, you can support me: https://buymeacoffee.com/abhi83540

If you want articles like this in your email inbox, you can subscribe to my newsletter: https://abhishekkumarpandey.substack.com/
